Teenage Instagram users will get new privacy settings, the platform's parent company Meta has announced in a major update.

It is an attempt by Meta, which also owns WhatsApp and Facebook, to reduce the amount of harmful content seen online by young people.

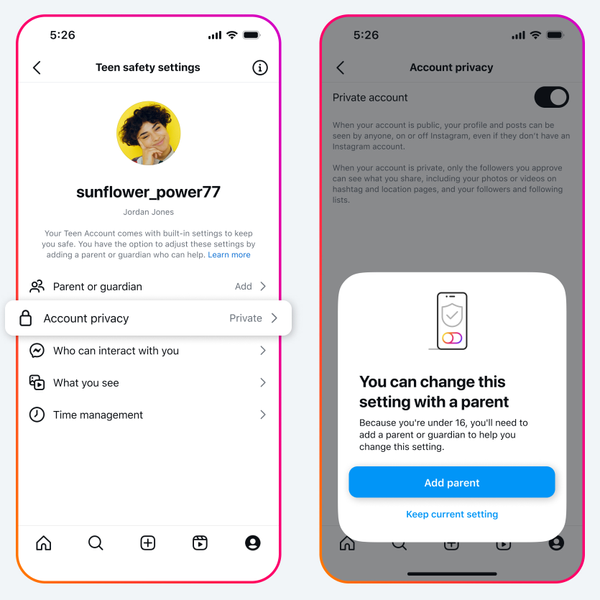

Instagram allows users aged 13 and over to sign up, but after the privacy changes all designated accounts will automatically become teen accounts, which will be private by default.

Those accounts can only be messaged and tagged by accounts they follow or are already connected to, and sensitive content settings will be the most restrictive available.

Offensive words and phrases will be filtered out of comments and direct message requests, and the teenagers will get notifications telling them to leave the app after 60 minutes each day.

Sleep mode will also be turned on between 10pm and 7am, which will mute notifications overnight and send auto-replies to DMs.

Users under 16 years of age will only be able to change the default settings with a parent's permission.

But 16- and 17-year-olds will be able to turn off the settings without parental permission.

Parents will also get a suite of settings to monitor who their children are engaging with and limit their use of the app.

Meta said it will place the identified users into teen accounts within 60 days in the US, UK, Canada and Australia, and in the European Union later this year.

The rest of the world will see the accounts rolled out from January.

Ofcom, the UK's communications regulator, called the changes "a step in the right direction" but said platforms will have to do "far more to protect their users, especially children" when the Online Safety Act starts coming into force early next year.

"We won't hesitate to take action, using the full extent of our enforcement powers, against any companies that come up short," said Richard Wronka, online safety supervision director at Ofcom.

Meta has faced multiple lawsuits over how young people are treated by its apps, with some claiming the technology is intentionally addictive and harmful.

Others have called on Meta to address how its algorithm works, including Ian Russell, the father of teenager Molly Russell, who died after viewing posts related to suicide, depression and anxiety online.

"Just as Molly was overwhelmed by the volume of the dangerous content that bombarded her, we've found evidence of algorithms pushing out harmful content to literally millions of young people," Mr Russell, who is chair of trustees at the Molly Rose Foundation, said last year.

Meta said the new restrictions on accounts are "designed to better support parents, and give them peace of mind that their teens are safe with the right protections in place".

It also acknowledged that teenagers may try to lie about their age to circumvent restrictions, and said that it is "building technology to proactively find accounts belonging to teens, even if the account lists an adult birthday".

That technology will begin testing in the US early next year.
